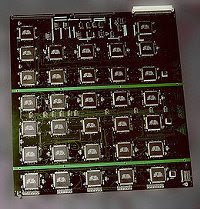

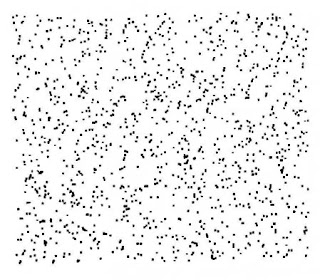

People are just as bad a determining whether an existing sequence is random. Look closely at the following two images.

Which one do you think looks more randomly distributed? If you read the article that the images link to, you already know that most people say the first image looks more random because the points are more smoothly distributed. This is exactly the property that makes it less random than the second image, which has more instances of points in tight clusters. A real set of random data points wouldn't be as evenly spaced as the first image.

Computers are much better than people, but by no means perfect, at generating sequences of random numbers. A number or sequence is said to be random if it is generated by a non-deterministic and unpredictable process. Since computers are inherently deterministic machines, this means that no computer can ever algorithmically generate a sequence that is truly random. They can come very close, though, with the help of a class of algorithms known as pseudo-random number generators (PRNG).

For a programmer writing a PRNG, or software that relies on one, testing its randomness reveals a unique problem. Unit testing software compares the output of a function to an expected value. If the output is expected to be unpredictable, you have a testing dilemma.

To make matters worse, there is no way even theoretically to prove that a sequence was generated randomly. Luckily there are statistical methods that are useful for revealing when a sequence is not random. Let's take a look at a simple PRNG, then use a common statistical method, the Monte Carlo method, to compare its effectiveness to a standard software library implementation.

A Naive PRNG

One of the oldest algorithmic methods for generating pseudorandom numbers is called the linear congruential method. Here's a simple example implementation:

public class NaiveRandom {

private int x; // seed

private int m = 134456; // modulus

private int a = 8121; // multiplier

private int c = 28411; // increment

public NaiveRandom()

{

x = 0;

}

public NaiveRandom(int seed)

{

this.x = seed;

}

public double next()

{

x = (x * a + c) % m;

return x / (double)m;

}

}

The original pseudocode and constants in the code above come from an example given in Statistical Mechanics by Werner Krauth. As Krauth explains in his book, these values are good for studying the algorithm, but not great if you need really random values. In the following section we'll see how it compares to Java's built-in PRNG.

The Monte Carlo Pi Program

Take a unit circle inscribed in a square.

The area of a circle is given by the formula

A = πr2

Since the radius is 1, the area is equal to π. Since the diameter of the circle and the length of a side of the square are both 2, the area of the square is 4. The ratio (which we'll call ρ) of the area of the circle to the area of the square is

ρ = π / 4 = 0.785398164

If we select a random sample of points from the square, the proportion of those points lying inside the circle should be equal to ρ. We can multiply this proportion by 4 for comparison to a library value of π. If the random number generator is close to truly random, then the closer our approximation should be to the actual value of π.

We can simplify the calculations necessary for the test if we only concern ourselves with one quadrant of the square. This is possible because it's the proportion of points inside the circle to those inside the square that matters. The proportion of such points is the same in each quadrant as it is in the whole. If we say that the center of the circle is at point (0, 0), then we need only generate random points (x, y) where x and y are both between 0.0 and 1.0. This is the standard output range for most PRNGs, like Java's Random class. Points that lie within the circle will be those that obey the inequality

x2 + y2 ≤ 1

Here is the Java code for a Monte Carlo Pi simulation.

import java.util.Date;

import java.util.Random;

public class MonteCarloPi {

public static void main(String[] args) {

// seed for NaiveRandom

Date now = new Date();

int seconds = (int)now.getTime();

// create random number generators

NaiveRandom nrand = new NaiveRandom(seconds);

Random rand = new Random();

// total number of sample points to take

int numPoints = 10000;

int inNaiveCircle = 0;

double xn, yn, zn;

// xn and yn will be the random point

// zn will be the calculated distance to the center

int inRandCircle = 0;

double xr, yr, zr;

// xr and yr will be the random point

// zr will be the calculated distance to the center

for(int i=0; i < numPoints; ++i)

{

xn = nrand.next();

yn = nrand.next();

xr = rand.nextDouble();

yr = rand.nextDouble();

zn = (xn * xn) + (yn * yn);

if(zn <= 1.0)

inNaiveCircle++;

zr = (xr * xr) + (yr * yr);

if(zr <= 1.0)

inRandCircle++;

}

// calculate the Pi approximations

double naivePi = approxPi(inNaiveCircle, numPoints);

double randomPi = approxPi(inRandCircle, numPoints);

// calculate the % error

double naiveError = calcError(naivePi);

double randomError = calcError(randomPi);

System.out.println("Naive Pi Approximation: " +

naivePi + ", Error: " + naiveError);

System.out.println("Random Pi Approximation: " +

randomPi + ", Error: " + randomError);

}

static double approxPi(int inCircle, int totalPoints)

{

return (double)inCircle / totalPoints * 4.0;

}

static double calcError(double pi)

{

return (pi - Math.PI)/Math.PI * 100;

}

}

Results

Run the simulator several times and you will see that Java's built-in PRNG seems to outperform the naive implementation, but not by much. Neither performs particularly well, but Monte Carlo simulations are only expected to be close, not exact. Here are my results after ten runs of the simulation.

| Naive Random | Java Random | ||

| Pi | Error(%) | Pi | Error(%) |

| 3.122 | -0.6237 | 3.1308 | -0.3435 |

| 3.1516 | 0.3185 | 3.1332 | -0.2671 |

| 3.1272 | -0.4581 | 3.1352 | -0.2035 |

| 3.1692 | 0.8788 | 3.1352 | -0.2035 |

| 3.1524 | 0.3440 | 3.1416 | 0.0002 |

| 3.1168 | -0.7892 | 3.1340 | -0.2417 |

| 3.1724 | 0.9806 | 3.1040 | -1.1966 |

| 3.1612 | 0.6241 | 3.1244 | -0.5473 |

| 3.1448 | 0.1021 | 3.1428 | 0.0384 |

| 3.1052 | -1.158 | 3.1628 | 0.6751 |

At least a small part of the imprecision of these results can be attributed to the fact that I only took 10,000 random points in each sample. A rule of thumb in Monte Carlo simulations is that for every 100X increase in data points, you'll get a 10X increase in the precision of your results. Since I took only 10,000 random points (100 * 100), I only got 2 digits of precision, with the third digit fluctuating.

Try increasing the number of random points to 1,000,000 and you should see that the third digit remains fixed over several runs of the program. The really interesting thing revealed is that our naive implementation continues to perform nearly as well as Java's built-in PRNG. This leads us to the conclusion (which can be easily verified) that Java's PRNG uses the same linear congruential method for generating random numbers as our naive implementation. The only significant difference between the two is that Java's implementation will be able to generate much longer sequences of random numbers before it starts to repeat itself.

Further Investigation

As I mentioned before, there are many statistical tests that can be used to measure the relative randomness of a sequence. I've only covered one of those tests in detail, but there are libraries available like the Diehard Battery of Tests of Randomness and ENT that include a wide variety of tests, and guidelines for interpreting them. If your application depends on randomness, I recommend evaluating your random number generator with one of these test suites.