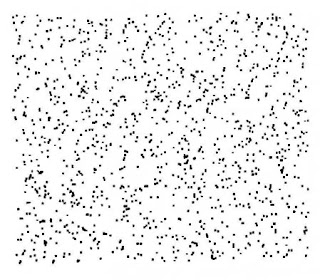

People are just as bad at determining whether an existing sequence is random. Look closely at the following two images.

Which one do you think looks more randomly distributed? If you read the article that the images link to, you already know that most people say the first image looks more random because the points are more smoothly distributed. This is exactly the property that makes it less random than the second image, which has more instances of points in tight clusters. A real set of random data points wouldn't be as evenly spaced as the first image.

Computers are much better than people, but by no means perfect, at generating sequences of random numbers. A number or sequence is said to be random if it is generated by a non-deterministic and unpredictable process. Since computers are inherently deterministic machines, no computer can ever algorithmically generate a sequence that is truly random. They can come very close, though, with the help of a class of algorithms known as pseudo-random number generators (PRNGs).

For a programmer writing a PRNG, or software that relies on one, testing its randomness reveals a unique problem. Unit testing software compares the output of a function to an expected value. If the output is expected to be unpredictable, you have a testing dilemma.
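One common way to soften this dilemma for software that relies on a PRNG is to fix the seed, since a seeded generator replays the same sequence every time. The sketch below is only an illustration of that idea; the class `SeededSequence` and its method `firstDoubles` are hypothetical names, not something from this article. It checks reproducibility, which says nothing about how random the sequence is.

```java
import java.util.Arrays;
import java.util.Random;

// Hypothetical helper for illustration; not part of the original article.
class SeededSequence {
    // Returns the first n doubles that java.util.Random produces for a seed.
    static double[] firstDoubles(long seed, int n) {
        Random rand = new Random(seed);
        double[] values = new double[n];
        for (int i = 0; i < n; i++) {
            values[i] = rand.nextDouble();
        }
        return values;
    }

    public static void main(String[] args) {
        double[] first = firstDoubles(42L, 5);
        double[] second = firstDoubles(42L, 5);
        // Same seed, same sequence: this prints "true".
        System.out.println(Arrays.equals(first, second));
    }
}
```

A test built this way can compare output against a recorded expected value, but it only pins down one sequence. Judging the quality of the generator itself still needs the statistical approach.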

To make matters worse, there is no way, even theoretically, to prove that a sequence was generated randomly. Luckily, there are statistical methods that are useful for revealing when a sequence is not random. Let's take a look at a simple PRNG, then use a common statistical method, the Monte Carlo method, to compare its effectiveness to a standard software library implementation.

A Naive PRNG

One of the oldest algorithmic methods for generating pseudorandom numbers is called the linear congruential method. Here's a simple example implementation:

public class NaiveRandom {

private int x; // seed

private int m = 134456; // modulus

private int a = 8121; // multiplier

private int c = 28411; // increment

public NaiveRandom()

{

x = 0;

}

public NaiveRandom(int seed)

{

this.x = Math.floorMod(seed, m); // reduce the seed into [0, m) so x * a cannot overflow or go negative

}

public double next()

{

x = (x * a + c) % m;

return x / (double)m;

}

}

The original pseudocode and constants in the code above come from an example given in Statistical Mechanics by Werner Krauth. As Krauth explains in his book, these values are good for studying the algorithm, but not great if you need really random values. In the following section we'll see how it compares to Java's built-in PRNG.
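One concrete limitation of a generator like this is its period: the state `x` can only take `m` distinct values, so the sequence must repeat after at most `m` steps. The following is a minimal sketch that measures the period for the constants used above. The class `LcgPeriod` and its method `period` are hypothetical names introduced for illustration.

```java
// Hypothetical helper for illustration; not part of the original article.
class LcgPeriod {
    // Counts the steps the recurrence x -> (a * x + c) % m needs to return
    // to its starting state. Gives up after m steps, because a state space
    // of size m cannot hold a longer cycle; in that case it returns m + 1.
    static long period(long a, long c, long m, long seed) {
        long start = Math.floorMod(seed, m);
        long x = start;
        long steps = 0;
        do {
            x = (a * x + c) % m;
            steps++;
        } while (x != start && steps <= m);
        return steps;
    }

    public static void main(String[] args) {
        // Constants copied from NaiveRandom above.
        System.out.println(period(8121, 28411, 134456, 0));
    }
}
```

If my reading of the Hull-Dobell conditions is right, these constants give the full period, so this should report 134456. That is worth keeping in mind for the experiment that follows: a run that draws more than 134,456 values from `NaiveRandom` starts revisiting values it has already produced.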

The Monte Carlo Pi Program

Take a unit circle inscribed in a square.

The area of a circle is given by the formula

A = πr²

Since the radius is 1, the area is equal to π. Since the diameter of the circle and the length of a side of the square are both 2, the area of the square is 4. The ratio (which we'll call ρ) of the area of the circle to the area of the square is

ρ = π / 4 ≈ 0.785398163

If we select a random sample of points from the square, the proportion of those points lying inside the circle should be approximately ρ. We can multiply this proportion by 4 for comparison to a library value of π. The closer the random number generator is to truly random, the closer our approximation should be to the actual value of π.

We can simplify the calculations necessary for the test if we only concern ourselves with one quadrant of the square. This is possible because it's the proportion of points inside the circle to those inside the square that matters. The proportion of such points is the same in each quadrant as it is in the whole. If we say that the center of the circle is at point (0, 0), then we need only generate random points (x, y) where x and y are both between 0.0 and 1.0. This is the standard output range for most PRNGs, like Java's Random class. Points that lie within the circle will be those that obey the inequality

x² + y² ≤ 1

Here is the Java code for a Monte Carlo Pi simulation.

import java.util.Date;

import java.util.Random;

public class MonteCarloPi {

public static void main(String[] args) {

// seed for NaiveRandom: the low 32 bits of the current time in milliseconds

Date now = new Date();

int seed = (int)now.getTime();

// create random number generators

NaiveRandom nrand = new NaiveRandom(seed);

Random rand = new Random();

// total number of sample points to take

int numPoints = 10000;

int inNaiveCircle = 0;

double xn, yn, zn;

// xn and yn will be the random point

// zn will be the squared distance to the center

int inRandCircle = 0;

double xr, yr, zr;

// xr and yr will be the random point

// zr will be the squared distance to the center

for(int i=0; i < numPoints; ++i)

{

xn = nrand.next();

yn = nrand.next();

xr = rand.nextDouble();

yr = rand.nextDouble();

zn = (xn * xn) + (yn * yn);

if(zn <= 1.0)

inNaiveCircle++;

zr = (xr * xr) + (yr * yr);

if(zr <= 1.0)

inRandCircle++;

}

// calculate the Pi approximations

double naivePi = approxPi(inNaiveCircle, numPoints);

double randomPi = approxPi(inRandCircle, numPoints);

// calculate the % error

double naiveError = calcError(naivePi);

double randomError = calcError(randomPi);

System.out.println("Naive Pi Approximation: " +

naivePi + ", Error: " + naiveError);

System.out.println("Random Pi Approximation: " +

randomPi + ", Error: " + randomError);

}

static double approxPi(int inCircle, int totalPoints)

{

return (double)inCircle / totalPoints * 4.0;

}

static double calcError(double pi)

{

return (pi - Math.PI)/Math.PI * 100;

}

}

Results

Run the simulator several times and you will see that Java's built-in PRNG seems to outperform the naive implementation, but not by much. Neither performs particularly well, but Monte Carlo simulations are only expected to be close, not exact. Here are my results after ten runs of the simulation.

| Naive Random Pi | Naive Random Error (%) | Java Random Pi | Java Random Error (%) |
| --- | --- | --- | --- |
| 3.122 | -0.6237 | 3.1308 | -0.3435 |
| 3.1516 | 0.3185 | 3.1332 | -0.2671 |
| 3.1272 | -0.4581 | 3.1352 | -0.2035 |
| 3.1692 | 0.8788 | 3.1352 | -0.2035 |
| 3.1524 | 0.3440 | 3.1416 | 0.0002 |
| 3.1168 | -0.7892 | 3.1340 | -0.2417 |
| 3.1724 | 0.9806 | 3.1040 | -1.1966 |
| 3.1612 | 0.6241 | 3.1244 | -0.5473 |
| 3.1448 | 0.1021 | 3.1428 | 0.0384 |
| 3.1052 | -1.158 | 3.1628 | 0.6751 |

At least a small part of the imprecision of these results can be attributed to the fact that I only took 10,000 random points in each sample. A rule of thumb in Monte Carlo simulations is that for every 100X increase in data points, you'll get a 10X increase in the precision of your results. Since I took only 10,000 random points (100 * 100), I only got 2 digits of precision, with the third digit fluctuating.
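The rule of thumb can be made a little more concrete. As a rough sketch, assume an ideal random source, so that each point independently lands inside the circle with probability π/4. The count of hits is then binomial, and the one-standard-deviation error of the π estimate works out to 4·√(ρ(1 − ρ)/N). The class `PiStandardError` below is a hypothetical name used only for this illustration.

```java
// Hypothetical helper for illustration; not part of the original article.
class PiStandardError {
    // Expected one-standard-deviation error of the estimate 4 * hits / n,
    // assuming independent points that land in the circle with p = pi / 4.
    static double standardError(long n) {
        double p = Math.PI / 4.0;
        return 4.0 * Math.sqrt(p * (1.0 - p) / n);
    }

    public static void main(String[] args) {
        long[] sampleSizes = {10_000L, 1_000_000L};
        for (long n : sampleSizes) {
            double se = standardError(n);
            System.out.printf("N = %d: std. error = %.5f (%.3f%% of pi)%n",
                    n, se, se / Math.PI * 100.0);
        }
    }
}
```

Because N sits under a square root, multiplying N by 100 divides the expected error by 10, which is the rule of thumb stated above. For N = 10,000 the formula gives an error of roughly half a percent of π, in line with the spread in the table.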

Try increasing the number of random points to 1,000,000 and you should see that the third digit remains fixed over several runs of the program. The really interesting thing revealed is that our naive implementation continues to perform nearly as well as Java's built-in PRNG. This is consistent with the fact (easily verified in the java.util.Random documentation) that Java's PRNG uses the same linear congruential method for generating random numbers as our naive implementation. The most significant difference between the two is that Java's implementation, with its 48-bit state, can generate much longer sequences of random numbers before it starts to repeat itself.
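The claim about Java's generator can be checked directly. The Javadoc for `java.util.Random` specifies a 48-bit linear congruential recurrence with multiplier `0x5DEECE66DL` and increment `0xBL`, where the seed is first scrambled by XOR with the multiplier and `nextInt()` returns the top 32 bits of the state. The sketch below re-implements just that much and compares it with the library. `JavaLcgSketch` is a hypothetical class name, and the code is an illustration of the documented algorithm rather than a copy of the JDK source.

```java
import java.util.Random;

// Hypothetical re-implementation for illustration; not part of the article.
class JavaLcgSketch {
    private static final long MULTIPLIER = 0x5DEECE66DL;
    private static final long INCREMENT = 0xBL;
    private static final long MASK = (1L << 48) - 1; // keep 48 bits of state

    private long state;

    JavaLcgSketch(long seed) {
        // Initial scramble described in the Random.setSeed documentation.
        state = (seed ^ MULTIPLIER) & MASK;
    }

    int nextInt() {
        // One linear congruential step, modulo 2^48.
        state = (state * MULTIPLIER + INCREMENT) & MASK;
        // Return the high 32 of the 48 state bits.
        return (int) (state >>> 16);
    }

    public static void main(String[] args) {
        JavaLcgSketch mine = new JavaLcgSketch(42L);
        Random library = new Random(42L);
        boolean allMatch = true;
        for (int i = 0; i < 5; i++) {
            allMatch &= (mine.nextInt() == library.nextInt());
        }
        System.out.println("matches java.util.Random: " + allMatch);
    }
}
```

Structurally this is the same recurrence as `NaiveRandom`, only with a modulus of 2^48 instead of 134456 and with the low-order bits discarded.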

Further Investigation

As I mentioned before, there are many statistical tests that can be used to measure the relative randomness of a sequence. I've only covered one of those tests in detail, but there are libraries available like the Diehard Battery of Tests of Randomness and ENT that include a wide variety of tests, and guidelines for interpreting them. If your application depends on randomness, I recommend evaluating your random number generator with one of these test suites.

15 comments:

How does SecureRandom do?

Tim,

Good suggestion. After 10 runs with 10,000 points each, the average errors(%) were:

NaiveRandom = 0.3555

JavaRandom = 0.3580

SecureRandom = 0.0041

I also did 10 runs with 1,000,000 points and got average errors of:

NaiveRandom = 0.0203

JavaRandom = 0.0300

SecureRandom = -0.0010

I wish I had thought of including SecureRandom before. I was peripherally aware that it existed, but having never used it for anything I completely forgot about it. :(

There are ways to generate truly random numbers using white noise from someone's sound card. I have heard of this method in various places; it's said to be a pretty good source of entropy. What are your thoughts on this?

Sam152,

Good question. There are very good sources of entropy in your hardware, but I think they may be overkill for most applications. The obvious drawback is that hardware sources of randomness are inherently slower than software solutions, but if you're considering a white noise source I assume you've already accepted that.

The way the white noise sources that I've read of work is that you tune a radio to an unused frequency and point the radio at your microphone. Your soundcard digitizes the white noise and uses it as a source of random numbers. This seems very foolproof, but there is an ingenious attack vector. If an attacker discovers what radio frequency you're using as your source, they can begin broadcasting non-random "noise" on that frequency, influencing the sequence of random numbers generated in your application.

Now you may be saying that that is a completely unreasonable attack, and that no one would ever go to those lengths to crack your application. My point is that if you're not that paranoid, then a cryptographically secure pseudo-random number generator (a software solution) should be secure enough.

On the other hand, maybe you're writing an online poker site and you really are that paranoid (and rightfully so). If that's the case, I'd say test the hell out of whatever source you use to make sure it's random enough for your particular application.

That's a great insight. Really loving your blog by the way.

Keep it up.

Sam152,

Thanks for reading.

Interesting article, and I think I will write my own soon too on a similar subject.

As for hardware random number generators: they are not really too slow. Sure, if you use your sound card or another low-yield source of entropy you will be pretty limited. That is also because you are misusing a piece of hardware that wasn't designed for what you are trying to do. Remember that you only get one or two bits of noise per sample, which isn't exactly ideal.

There is specialized hardware for that sort of thing which can yield much greater speeds, up to above 100 MiB/s. Those things usually come as PCI cards.

The main problem with naïve hardware random number generation (like from the sound card or a webcam; but in general for all things concerning RNGs built by people with little understanding [yes, that includes me]) is that the results aren't optimal and in some cases even disastrously bad.

Case in point: noise from a webcam or sound card (or any other wire) is biased, depending on the temperature of the circuit. So your numbers will follow a different distribution in summer than in winter. Not ideal.

Much thought concerning hardware random number generators goes in exactly this topic: How to eliminate bias. Usually it's necessary to post-process the numbers obtained to get a uniform distribution (which is most of the time the desired outcome and can be transformed into other distributions, such as normal or gamma distributions easily).

I found a nice overview of some hardware RNGs along with some of their stats (such as speed) here. I don't think it's exhaustive and probably there are even faster devices by now. The prices usually also forbid using them for personal purposes :-)

Some people also came up with ingenious ways of generating numbers such as the dice generator and its (very) big brother, the Dice-O-Matic mark II. Of course, you only build such a beast if you need many dice rolls.

Although I'm not exactly sure about the effect of wear on the dice. Casinos, for example, are obliged to renew dice every now and then, and casino dice also have no rounded edges, to minimize skewing the distribution. This device is therefore probably not usable for, say, an online poker site (they have very stringent requirements for the random numbers they use).

On another note, the debate of PRNG vs. »true« RNG is also pretty pointless at times, as the debating people tend to ignore a very important fact about random number generation: Different uses have different requirements.

»True« random numbers are suitable for things like key generation, seeding cryptographically-secure PRNGs, &c.

Pseudo-random numbers are a must for simulations, where you need the ability to exactly reproduce the results of a simulation run. Also, since your results may be just an artifact of the PRNG used, be sure to repeat the experiment with at least one other PRNG of a different »family« (linear congruential generators, for example, are nearly unsuitable for simulations, but you shouldn't do the experiment with the Mersenne Twister and a WELL generator either, since both use a very similar underlying algorithm [in fact, WELL is an improvement on MT19937 by the original authors]).

And then there are quasi-random numbers. The very first picture in this article details such numbers. They are designed not to be »random« but rather well-distributed. As such they are very suitable for Monte-Carlo simulations where a uniform coverage of the space is desirable without »clumps« or »holes« as seen in the second picture of the article.

So for your Pi example a quasi-random number generator might actually be the option yielding the best results.

I will be picky and assert that neither of the data sets depicted is "more random" than the other. This is like saying that 13 is more random than 100. Ignoring the fact that they represent finite samples from theoretical distributions, what you are really talking about are differences in distribution and what quasi-random number types call discrepancy.

Will,

The first thing I asked about the two images was, "Which one do you think looks more randomly distributed?" (Emphasis added.)

That's quite a bit different than asking if 13 is more random than 100, actually. One single data point can't be measured for randomness. A set of points certainly can.

I skipped over a lot of the fundamental tests that you would normally do in order to get to the Monte Carlo Pi program that I wanted to present. You'd want to do range, mean, variance, and bucket tests first, but I thought this program was a neat trick. If you really want to be picky, it would probably be easier to talk about these glaring omissions on my part, rather than split hairs about the difference between the phrases "more random" and "more randomly distributed."

Bill, thanks for sharing this. I wrote a small fiddle to test various implementations of PRNGs for JavaScript. Sharing it here, just in case someone would want to play with it :)

I think I would use white noise combined with a PRNG. I would pick the ones and zeros out of the ambient noise in a room. Even if someone could control the noise in my room, the numbers would be picked out in such a way that they would never be able to influence the noise enough. If you needed more speed, this could be combined (e.g. as the seed for a handful of numbers) with a faster PRNG. Even using "slow" methods, a computer will make quick work of even the largest numbers.

Hi Bill,

I stumbled upon this post after writing something very similar on my own blog, and I was amused to recognize your SO username. Thanks for your very clear article.

I'd like to comment on a few points you made:

"The obvious drawback is that hardware sources of randomness are inherently slower than software solutions, but if you're considering a white noise source I assume you've already accepted that."

Intel is currently launching a chip that might change this. Here's an article about this; basically, they are using coupled inverters forced into an inconsistent state, and measuring how their wave function collapses to produce truly (quantum) random numbers, at a fascinating rate.

Also, I'd like to add to the debate launched by Will Dwinnell. I'm only speaking from my own understanding here, so please do correct me if I make mistakes, but the general ideas should be correct.

Deciding whether a single data point is random is at the core of the technique recommended by NIST for testing PRNGs. Given a sequence and a transformation (sum all bits, approximate pi, ...), you assess how likely a perfectly random source was to yield the same result (they call that the p-value). You then reject the sequence as non-random if its p-value is lower than a predefined threshold, say 1% -- that is, if the probability of randomly obtaining the same result was below 0.01.

Finally, you repeat the experiment for many sequences, and verify that the rejection rate is acceptably close to 0.01.

So by many tests, even asking which image was more random would have been perfectly acceptable ;)

I've written an article about testing pseudo-random number generators, though my hosting provider has some trouble these days. I would be honoured if you could perhaps have a look, and tell me what you think.

Again, thanks for this excellent post. You have a very nice writing style.

Cheers,

Clément.

I'm wondering why there is such a difference in error when computing pi using SecureRandom. It's just 10,000 points, right? Wouldn't that mean that SecureRandom is more stratified? I mean more evenly distributed, which doesn't necessarily mean it's more random, like quasi-random number generators, for example.
